I wanted to share my working configuration for running the Autopilot addon with a local Ollama instance instead of the OpenAI API. This might be useful if you want to use local LLMs for privacy or cost reasons.

Environment:

- Cockpit CMS Pro with the Autopilot addon
- Ollama server running locally

Step 1: Configure `config/config.php`

Add the following to your config file:

```php
'autopilot' => [
    'openAI' => [
        'apiKey'    => 'ollama',
        'chatModel' => 'gemma3:latest',
        'useTools'  => false,
        'baseUri'   => 'http://your-ollama-server-ip:port/v1',
    ],
],
```

Notes:

- `apiKey` can be any non-empty string (Ollama doesn’t require authentication).
- `chatModel`: I’m using `gemma3:latest`, but you can use any model available in your Ollama instance.
- `useTools` must be set to `false`, because many local models (including Gemma 3) do not support tool calling.
- `baseUri` should point to your Ollama server’s OpenAI-compatible endpoint, i.e. the server address followed by `/v1` (Ollama listens on port 11434 by default).
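Before pointing Cockpit at the server, it can help to confirm that the URL you put in `baseUri` actually answers OpenAI-style requests. Below is a minimal PHP sketch, assuming Ollama's OpenAI-compatible `/v1/models` and `/v1/chat/completions` routes. `OLLAMA_BASE_URI` and `OLLAMA_MODEL` are hypothetical environment variables used only by this script, not Autopilot settings. It uses PHP's built-in HTTP stream wrapper, so `allow_url_fopen` must be enabled.

```php
<?php
// Smoke test for an Ollama OpenAI-compatible endpoint (a sketch, not part of Autopilot).
// OLLAMA_BASE_URI and OLLAMA_MODEL are hypothetical env vars used only by this script.

declare(strict_types=1);

// Returns true if $model appears in a decoded OpenAI-style /models response.
function modelIsListed(array $modelsResponse, string $model): bool
{
    foreach ($modelsResponse['data'] ?? [] as $entry) {
        if (($entry['id'] ?? null) === $model) {
            return true;
        }
    }
    return false;
}

// Builds a minimal, non-streaming chat completion request body.
function buildChatPayload(string $model, string $prompt): array
{
    return [
        'model' => $model,
        'messages' => [['role' => 'user', 'content' => $prompt]],
        'stream' => false,
    ];
}

// Sends a GET (no body) or POST (JSON body) request and decodes the JSON reply.
// The bearer token is sent like an OpenAI key; Ollama does not check its value.
function requestJson(string $url, string $apiKey, ?array $body = null): array
{
    $http = [
        'method' => 'GET',
        'header' => "Content-Type: application/json\r\nAuthorization: Bearer {$apiKey}\r\n",
        'timeout' => 120,
    ];
    if ($body !== null) {
        $http['method'] = 'POST';
        $http['content'] = json_encode($body, JSON_THROW_ON_ERROR);
    }
    $raw = file_get_contents($url, false, stream_context_create(['http' => $http]));
    if ($raw === false) {
        throw new RuntimeException("Request to {$url} failed");
    }
    return json_decode($raw, true, 512, JSON_THROW_ON_ERROR);
}

$baseUri = getenv('OLLAMA_BASE_URI'); // e.g. http://your-ollama-server-ip:port/v1
$model = getenv('OLLAMA_MODEL') ?: 'gemma3:latest';

// Only touch the network when a base URI is supplied.
if ($baseUri !== false && $baseUri !== '') {
    $baseUri = rtrim($baseUri, '/');

    $models = requestJson("{$baseUri}/models", 'ollama');
    echo modelIsListed($models, $model) ? "Model {$model} found\n" : "Model {$model} NOT found\n";

    $reply = requestJson("{$baseUri}/chat/completions", 'ollama', buildChatPayload($model, 'Say hello.'));
    echo $reply['choices'][0]['message']['content'] ?? '(no content)', "\n";
}
```

Saved under any name (say `check-ollama.php`), it could be run as `OLLAMA_BASE_URI=http://your-ollama-server-ip:port/v1 php check-ollama.php`. If the model is not listed or the chat request fails here, Autopilot is unlikely to work with the same settings either.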

Step 2: Modify `addons/Autopilot/Controller/Autopilot.php`

The controller only reads `useTools` from the request and falls back to a hardcoded `true`, so the value in the config file is ignored. To make it configurable, modify line 52:

Before:

```php
$useTools = $this->param('useTools', true);
```

After:

```php
// Use the config value (default true) when the request does not set useTools
$configUseTools = $this->app->retrieve('autopilot/openAI/useTools', true);
$useTools = $this->param('useTools', $configUseTools);
```

With this change, the `useTools` setting in the config file is respected, and the behavior stays backward compatible: if the setting is absent, it still defaults to `true`. A `useTools` value sent with the request still takes precedence over the config.
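To check the resulting precedence without running Cockpit, here is a standalone sketch. `configGet()` is a hypothetical stand-in for `$this->app->retrieve()` that walks a slash-separated path through a nested config array, and `resolveUseTools()` roughly mimics `$this->param('useTools', $configUseTools)`: a request value wins, then the config value, then `true`. The `filter_var()` step is an extra assumption for the case where the parameter arrives as a string such as `"false"`, which PHP would otherwise treat as truthy; I have not confirmed whether the addon ever sends it that way.

```php
<?php
// Standalone illustration of the useTools precedence (not Cockpit code).
// configGet() and resolveUseTools() are hypothetical helpers for this sketch.

declare(strict_types=1);

// Walks a slash-separated path such as 'autopilot/openAI/useTools'
// through a nested config array, returning $default if any key is missing.
function configGet(array $config, string $path, mixed $default = null): mixed
{
    $value = $config;
    foreach (explode('/', $path) as $key) {
        if (!is_array($value) || !array_key_exists($key, $value)) {
            return $default;
        }
        $value = $value[$key];
    }
    return $value;
}

// Request parameter wins; otherwise the config value; otherwise true.
function resolveUseTools(array $config, array $requestParams): bool
{
    $configUseTools = configGet($config, 'autopilot/openAI/useTools', true);
    $raw = array_key_exists('useTools', $requestParams) ? $requestParams['useTools'] : $configUseTools;

    // Assumption: normalise string values like "false" or "0" to real booleans.
    return filter_var($raw, FILTER_VALIDATE_BOOLEAN);
}

$config = ['autopilot' => ['openAI' => ['apiKey' => 'ollama', 'useTools' => false]]];

var_dump(resolveUseTools($config, []));                     // bool(false): config applies
var_dump(resolveUseTools($config, ['useTools' => 'true'])); // bool(true): request overrides
var_dump(resolveUseTools([], []));                          // bool(true): default
```

The three calls cover the cases described above: config only, request override, and no setting at all.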

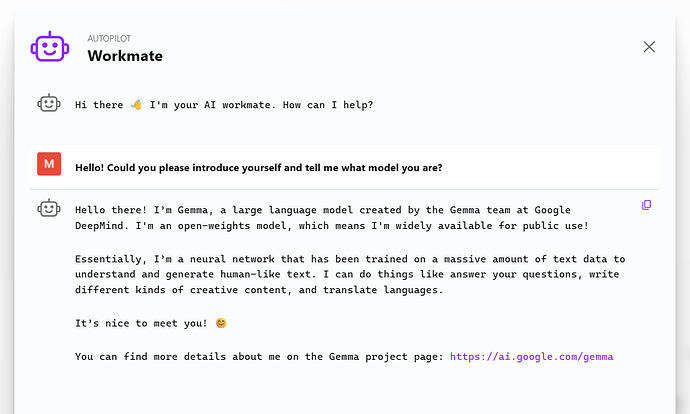

Result: The Autopilot addon now works with my local Ollama instance running Gemma 3. Response times depend on your hardware, but it’s a great solution for local development or privacy-focused deployments.